Background

In a project I am working on, we manage the AWS infrastructure with Terraform (Application Load Balancers, Elastic Kubernetes Service clusters, Security Groups, etc.). We also have one requirement: the Application Load Balancers (ALBs) need to be treated like pets, primarily because another team manages the DNS records. This is why we cannot use Kubernetes controllers to dynamically manage the ALBs and update DNS records. The problem statement can therefore be summarised as: how do we manage AWS EKS clusters and ALBs with Terraform, and attach EKS nodes to ALB TargetGroups?

Connect nodes to ALBs using Terraform

Our initial implementation used Terraform to attach the AWS AutoScalingGroups to TargetGroups.

When using self-managed node groups, you can pass a list of target_group_arns so that any node in the AutoScalingGroup automatically registers itself as a target of the given TargetGroups. Nice and easy. Check the docs. We use the community AWS EKS Terraform module, which supports this argument.
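As a rough illustration of what that argument amounts to, here is a minimal sketch using the plain aws_autoscaling_group resource rather than the community module. The resource name, sizes and input variables are hypothetical, and I am assuming the module's argument ends up on the underlying AutoScalingGroup in much the same way.

```hcl
# Hypothetical inputs, declared only to keep the sketch self-contained.
variable "private_subnet_ids" {
  type = list(string)
}

variable "launch_template_id" {
  type = string
}

variable "target_group_arn" {
  type = string
}

resource "aws_autoscaling_group" "workers" {
  name                = "example-workers"
  min_size            = 1
  max_size            = 3
  desired_capacity    = 2
  vpc_zone_identifier = var.private_subnet_ids

  # Instances launched by this group are registered with these TargetGroups.
  target_group_arns = [var.target_group_arn]

  launch_template {
    id      = var.launch_template_id
    version = "$Latest"
  }
}
```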

When using EKS managed node groups, the option to pass target_group_arns is not available, and EKS dynamically generates AutoScalingGroups based on your EKS node group definitions. The side effect is that the AutoScalingGroup IDs are not known until the groups have been created. When working with Terraform this becomes a problem: you have to run terraform apply with the -target option to provision the EKS node group first, before you can reference the AutoScalingGroup and attach it to a TargetGroup.
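To make the dependency concrete, here is a minimal sketch with plain AWS provider resources instead of the community module. All names and variables are hypothetical. The AutoScalingGroup name is an attribute that only exists after EKS has created the node group; whether that actually forces a targeted apply depends on how the value is consumed (for example, as a for_each or count key, as discussed in the issue linked below).

```hcl
# Hypothetical inputs, declared only to keep the sketch self-contained.
variable "cluster_name" {
  type = string
}

variable "node_role_arn" {
  type = string
}

variable "subnet_ids" {
  type = list(string)
}

variable "target_group_arn" {
  type = string
}

resource "aws_eks_node_group" "example" {
  cluster_name    = var.cluster_name
  node_group_name = "example"
  node_role_arn   = var.node_role_arn
  subnet_ids      = var.subnet_ids

  scaling_config {
    desired_size = 2
    max_size     = 3
    min_size     = 1
  }
}

# EKS generates the AutoScalingGroup, so its name is unknown at plan time
# until the node group above has been created.
resource "aws_autoscaling_attachment" "example" {
  autoscaling_group_name = aws_eks_node_group.example.resources[0].autoscaling_groups[0].name
  lb_target_group_arn    = var.target_group_arn
}
```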

Here’s a lovely GitHub issue with more details: https://github.com/terraform-aws-modules/terraform-aws-eks/issues/1539

This really is the core of the problem we wanted to address: how to use EKS managed node groups without a hacky solution. The solution we chose was the AWS Load Balancer Controller.

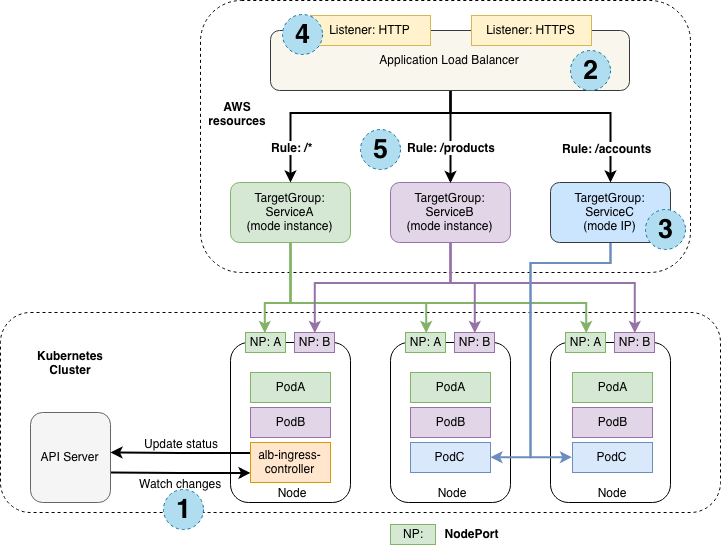

AWS Load Balancer Controller

The AWS Load Balancer Controller is a Kubernetes controller that can manage the lifecycle of AWS Load Balancers, TargetGroups, Listeners (and Rules), and connect them with nodes (and pods) in your Kubernetes cluster. Check out the “How it works” page for details on the design.

Looking back at our use case, we want to use an existing Application Load Balancer that is managed by Terraform. If you search for this online, you will most certainly find another lovely GitHub issue: https://github.com/kubernetes-sigs/aws-load-balancer-controller/issues/228

It’s a long issue, with lots of suggestions. Personally, I was not concerned with how much of the infrastructure we manage with Terraform vs Kubernetes; the primary goal was a simple solution that did not require too much customisation, and I found the idea of the TargetGroupBinding Custom Resource Definition (CRD) quite appealing.

TargetGroupBinding Custom Resource Definition

TargetGroupBinding is a CRD that the AWS Load Balancer Controller installs. If you follow the most common use case and manage your ALBs with the AWS Load Balancer Controller, it will create TargetGroupBindings under the hood even if you do not interact with them directly. The good news: it is a core feature of the AWS LB Controller, not an extension for people with an edge case. That gave me some confidence.

It requires an existing TargetGroup and IAM policies to look up the TargetGroup and attach/detach targets. The required IAM policies are mentioned here.

Here’s a sample TargetGroupBinding manifest taken from the docs:

    apiVersion: elbv2.k8s.aws/v1beta1
    kind: TargetGroupBinding
    metadata:
      name: ingress-nginx-binding
    spec:
      # The service we want to connect to.
      # We use the Ingress Nginx Controller so let's point to that service.
      # NOTE: it was necessary for this TargetGroupBinding to be in the same namespace as the service.
      serviceRef:
        name: ingress-nginx
        port: 80
      # NOTE: need the ARN of the TargetGroup... Bit of a PITA.
      # Would be nice to use tags to look up the TargetGroup, for example.
      targetGroupARN: arn:aws:elasticloadbalancing:eu-west-1:123456789012:targetgroup/eks-abcdef/73e2d6bc24d8a067

For our case, this means managing the ALBs, Listeners, Rules and TargetGroups with Terraform. The AWS Load Balancer Controller would only be responsible for attaching nodes to the specified TargetGroups. This sounds like a clean separation of concerns.
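For illustration, the Terraform side of that split might look roughly like the sketch below. This is not our actual configuration: the resource names, ports and input variables are hypothetical. The point is that Terraform declares the ALB, Listener and TargetGroup but no target attachments, which are left to the controller.

```hcl
# Hypothetical inputs, declared only to keep the sketch self-contained.
variable "vpc_id" {
  type = string
}

variable "public_subnet_ids" {
  type = list(string)
}

variable "alb_security_group_id" {
  type = string
}

resource "aws_lb" "shared" {
  name               = "example-shared"
  load_balancer_type = "application"
  subnets            = var.public_subnet_ids
  security_groups    = [var.alb_security_group_id]
}

# target_type "instance" matches a TargetGroupBinding that registers nodes.
resource "aws_lb_target_group" "cluster" {
  name        = "example-cluster"
  port        = 80
  protocol    = "HTTP"
  target_type = "instance"
  vpc_id      = var.vpc_id
}

resource "aws_lb_listener" "http" {
  load_balancer_arn = aws_lb.shared.arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.cluster.arn
  }
}

# Note: no aws_lb_target_group_attachment resources here. Registering and
# deregistering targets is the AWS Load Balancer Controller's job.
```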

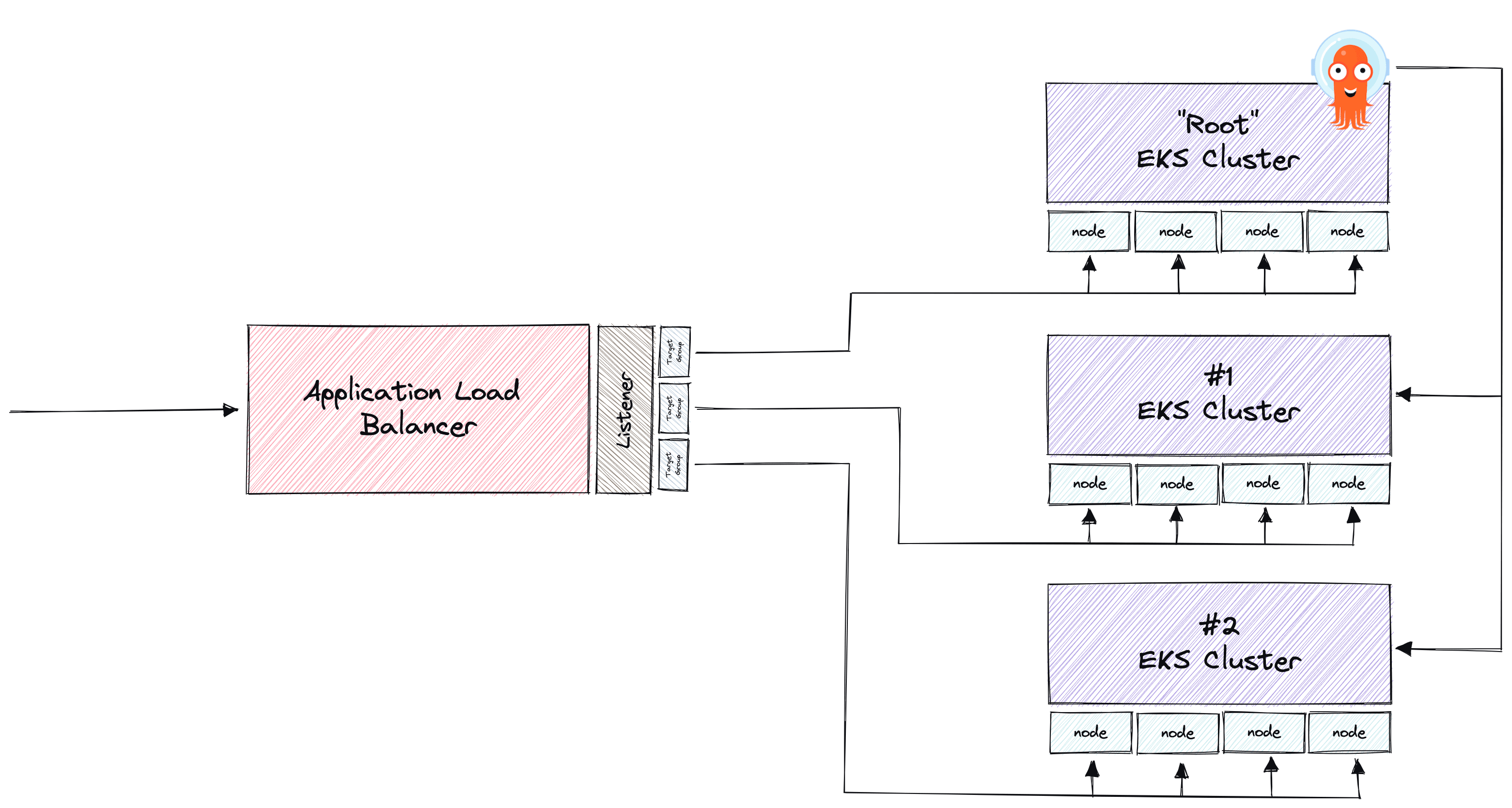

Multiple EKS clusters, same ALB

Expanding on our particular use case, we manage multiple EKS clusters that share Application Load Balancers. We already use ArgoCD ApplicationSets to manage applications across clusters. We have a “root” cluster that runs core services, like ArgoCD, which connects to multiple other clusters. The below diagram is a high-level simplified illustration of the setup we want to achieve. It will be ArgoCD’s job to deploy the AWS Load Balancer Controller and TargetGroupBinding manifests to the different clusters.

Let’s look at how we implemented this with the AWS Load Balancer Controller next.

Implementation

Terraform

We use Terraform to manage (amongst other things) the EKS clusters, ALBs and TargetGroups. To implement the AWS Load Balancer Controller, all we needed to do was create the necessary IAM role that can be assumed by a Kubernetes ServiceAccount. The following snippet shows this:

    #
    # Create IAM role policy granting the kubernetes service account AssumeRoleWithWebIdentity
    #
    data "aws_iam_policy_document" "aws_lb_controller" {
      statement {
        actions = ["sts:AssumeRoleWithWebIdentity"]

        principals {
          type = "Federated"
          identifiers = [
            "arn:aws:iam::${data.aws_caller_identity.current.account_id}:oidc-provider/${local.cluster_oidc_issuer}"
          ]
        }

        condition {
          test     = "StringEquals"
          variable = "${local.cluster_oidc_issuer}:sub"
          values   = ["system:serviceaccount:aws-lb-controller:aws-lb-controller"]
        }
      }
    }

    #
    # Create an AWS IAM role that will be assumed by our kubernetes service account
    #
    resource "aws_iam_role" "aws_lb_controller" {
      name               = "${local.cluster_name}-aws-lb-controller"
      assume_role_policy = data.aws_iam_policy_document.aws_lb_controller.json
      inline_policy {
        name = "${local.cluster_name}-aws-lb-controller"
        policy = jsonencode(
          {
            "Version" : "2012-10-17",
            "Statement" : [
              {
                "Action" : [
                  "ec2:DescribeVpcs",
                  "ec2:DescribeSecurityGroups",
                  "ec2:DescribeInstances",
                  "elasticloadbalancing:DescribeTargetGroups",
                  "elasticloadbalancing:DescribeTargetHealth",
                  "elasticloadbalancing:ModifyTargetGroup",
                  "elasticloadbalancing:ModifyTargetGroupAttributes",
                  "elasticloadbalancing:RegisterTargets",
                  "elasticloadbalancing:DeregisterTargets"
                ],
                "Effect" : "Allow",
                "Resource" : "*"
              }
            ]
          }
        )
      }
    }

This has some dependencies so cannot be run “as is”. But if you need help configuring IAM Roles for Service Accounts (IRSA) then I already wrote a post on the topic which you can find here.
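For orientation, the dependencies referenced in the snippet could be defined along these lines. This is only a sketch under assumptions: the cluster name is made up, and it presumes an IAM OIDC provider has already been registered for the cluster's issuer URL, which is not shown here.

```hcl
locals {
  # Hypothetical cluster name, for illustration only.
  cluster_name = "example-cluster"

  # The issuer URL without its scheme, which is the form used in the OIDC
  # provider ARN and in the ":sub" condition key.
  cluster_oidc_issuer = replace(
    data.aws_eks_cluster.this.identity[0].oidc[0].issuer,
    "https://",
    ""
  )
}

# Account ID used to build the OIDC provider ARN.
data "aws_caller_identity" "current" {}

# Look up the existing EKS cluster to read its OIDC issuer URL.
data "aws_eks_cluster" "this" {
  name = local.cluster_name
}
```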

The TargetGroupBinding Kubernetes Custom Resource we need to create requires the TargetGroup ARN, which is non-deterministic. In our setup, we use Terraform to create the Kubernetes secret that informs ArgoCD about connected clusters. Within that secret we can attach additional labels that can be accessed by the ArgoCD ApplicationSets, which means we have a very primitive way of passing data from Terraform to ArgoCD without any extra tools. Note that Kubernetes labels have some restrictions on the syntax and character set that can be used, so we can’t just pass in arbitrary data.
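To see why the ARN cannot be passed as a single label, here is a small standalone sketch that checks values against the Kubernetes label value rules (at most 63 characters; alphanumerics, "-", "_" and "." only; starting and ending with an alphanumeric). The local and output names are made up for this example, and the ARN is the sample one from the docs.

```hcl
locals {
  example_arn = "arn:aws:elasticloadbalancing:eu-west-1:123456789012:targetgroup/eks-abcdef/73e2d6bc24d8a067"

  # Pattern for a non-empty Kubernetes label value.
  label_value_pattern = "^[A-Za-z0-9]([-A-Za-z0-9_.]*[A-Za-z0-9])?$"

  # Splitting on "/" leaves the name at index 1 and the ID at index 2.
  arn_parts = split("/", local.example_arn)

  label_value_checks = {
    for key, value in {
      arn  = local.example_arn
      name = local.arn_parts[1]
      id   = local.arn_parts[2]
    } : key => length(value) <= 63 && can(regex(local.label_value_pattern, value))
  }
}

output "label_value_checks" {
  value = local.label_value_checks
}
```

Running terraform apply on this alone should report the full ARN as invalid (it contains ":" and "/" and is longer than 63 characters), while the name and ID parts pass. That is why the two parts are passed as separate labels.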

Here is how we create the Kubernetes secret that essentially “registers” a cluster with ArgoCD, and how we pass the TargetGroup name and ID via labels.

    locals {
      #
      # Extract the target group name and ID to use in ArgoCD secret
      #
      aws_lb_controller = merge(coalesce(local.apps.aws_lb_controller, { enabled = false }), {
        targetgroup_name = split("/", data.aws_lb_target_group.this.arn_suffix)[1]
        targetgroup_id   = split("/", data.aws_lb_target_group.this.arn_suffix)[2]
      })
    }

    #
    # Get cluster TargetGroup ARNs
    #
    data "aws_lb_target_group" "this" {
      name = "madeupname-${local.cluster_name}"
    }

    #
    # Create Kubernetes secret in root cluster where ArgoCD is running.
    #
    # The secret tells ArgoCD about a cluster and how to connect (e.g. credentials).
    #
    resource "kubernetes_secret" "argocd_cluster" {
      provider = kubernetes.root

      metadata {
        name      = "cluster-${local.cluster_name}"
        namespace = "argocd"
        labels = {
          # Tell ArgoCD that this secret defines a new cluster
          "argocd.argoproj.io/secret-type" = "cluster"
          "environment"                    = var.environment
          "aws-lb-controller/enabled"      = local.aws_lb_controller.enabled
          # Kubernetes labels do not allow ARN values, so pass the name and ID separately
          "aws-lb-controller/targetgroup-name" = local.aws_lb_controller.targetgroup_name
          "aws-lb-controller/targetgroup-id"   = local.aws_lb_controller.targetgroup_id
          ...
          ...

        }
      }

      data = {
        name   = local.cluster_name
        server = data.aws_eks_cluster.this.endpoint
        config = jsonencode({
          awsAuthConfig = {
            clusterName = data.aws_eks_cluster.this.id
            # Provide the rolearn that was created for this cluster, and which the
            # root ArgoCD role should be able to assume
            roleARN = aws_iam_role.argocd_access.arn
          }
          tlsClientConfig = {
            insecure = false
            caData   = data.aws_eks_cluster.this.certificate_authority.0.data
          }
        })
      }

      type = "Opaque"
    }

That’s it for the Terraform config. We use these snippets in Terraform modules that get called for each cluster we create, keeping things DRY.

ArgoCD

Let’s first look at the directory structure and we can work through the relevant files (note that in our code this exists alongside many other applications inside a Git repository).

    .
    ├── appset-aws-lb-controller.yaml
    ├── appset-targetgroupbindings.yaml
    ├── chart
    │   ├── Chart.yaml
    │   ├── README.md
    │   ├── templates
    │   │   └── targetgroupbindings.yaml
    │   └── values.yaml
    └── kustomization.yaml

    2 directories, 7 files

We use Kustomize to connect our ArgoCD applications together (minimising the number of “app of apps” connections needed). That’s what the kustomization.yaml file is for; here it lists the two top-level appset-*.yaml files.

The appset-aws-lb-controller.yaml file contains the AWS Load Balancer Controller ApplicationSet which uses the Helm chart to install the controller on each of our clusters.

    # File: appset-aws-lb-controller.yaml
    apiVersion: argoproj.io/v1alpha1
    kind: ApplicationSet
    metadata:
      name: aws-lb-controller
      namespace: argocd
    spec:
      generators:
        # This is a little trick we use for nearly all our apps to control which apps
        # should be installed on which clusters. Terraform creates the secret that
        # contains these labels, so that's where the logic is controlled for setting
        # these labels to true/false.
        - clusters:
            selector:
              matchLabels:
                aws-lb-controller/enabled: "true"
      syncPolicy:
        preserveResourcesOnDeletion: false
      template:
        metadata:
          name: "aws-lb-controller-{{ name }}"
          namespace: argocd
        spec:
          project: madeupname
          destination:
            name: "{{ name }}"
            namespace: aws-lb-controller
          syncPolicy:
            automated:
              prune: true
              selfHeal: true
            syncOptions:
              - CreateNamespace=true
              - PruneLast=true

          source:
            repoURL: "https://aws.github.io/eks-charts"
            chart: aws-load-balancer-controller
            targetRevision: 1.4.4
            helm:
              releaseName: aws-lb-controller
              values: |
                clusterName: {{ name }}
                serviceAccount:
                  create: true
                  annotations:
                    "eks.amazonaws.com/role-arn": "arn:aws:iam::123456789012:role/{{ name }}-aws-lb-controller"
                  name: aws-lb-controller
                # We won't be using ingresses with this controller.
                createIngressClassResource: false
                disableIngressClassAnnotation: true

                resources:
                  limits:
                    cpu: 100m
                    memory: 128Mi
                  requests:
                    cpu: 100m
                    memory: 128Mi

Next up we have the appset-targetgroupbindings.yaml ApplicationSet which creates the TargetGroupBinding on each of our clusters. For this, we needed to template some values based on the cluster and the most straightforward way I have found with ArgoCD is to create a minimalistic Helm chart for our purpose. This is what the chart/ directory is, which contains a single template file targetgroupbindings.yaml.

Let’s first look at the ApplicationSet which installs the Helm Chart:

    # File: appset-targetgroupbindings.yaml
    apiVersion: argoproj.io/v1alpha1
    kind: ApplicationSet
    metadata:
      name: targetgroupbindings
      namespace: argocd
    spec:
      generators:
        - clusters:
            selector:
              matchLabels:
                aws-lb-controller/enabled: "true"
      syncPolicy:
        preserveResourcesOnDeletion: false
      template:
        metadata:
          name: "targetgroupbindings-{{ name }}"
          namespace: argocd
        spec:
          project: madeupname
          destination:
            name: "{{ name }}"
            # TargetGroupBindings need to be in the same namespace as the service
            # they bind to
            namespace: ingress-nginx
          syncPolicy:
            automated:
              prune: true
              selfHeal: true
            syncOptions:
              - CreateNamespace=true
              - PruneLast=true

          source:
            repoURL: "<url-of-this-repo>"
            path: "apps/aws-lb-controller/chart"
            targetRevision: "master"
            helm:
              releaseName: targetgroupbinding
              values: |
                # AWS ARNs are not valid Kubernetes label values, so we had to split the ARN up and glue it back together here.
                targetGroupArn: "arn:aws:elasticloadbalancing:eu-west-1:123456789012:targetgroup/{{ metadata.labels.aws-lb-controller/targetgroup-name }}/{{ metadata.labels.aws-lb-controller/targetgroup-id }}"

Next we can look at the single template in our minimal Helm chart:

    # File: targetgroupbindings.yaml
    apiVersion: elbv2.k8s.aws/v1beta1
    kind: TargetGroupBinding
    metadata:
      name: ingress-nginx
    spec:
      targetType: instance
      serviceRef:
        name: ingress-nginx-controller
        port: 80
      targetGroupARN: {{ required "targetGroupArn required" .Values.targetGroupArn }}
      # By default, add all nodes to the cluster unless they have the label
      # exclude-from-lb-targetgroups set
      nodeSelector:
        matchExpressions:
          - key: exclude-from-lb-targetgroups
            operator: DoesNotExist

It’s not an ideal situation to use Helm charts for this purpose, but the inspiration came from the ArgoCD app of apps example repository. Anyway, I am fairly happy with this implementation and it works dynamically for any new clusters that we add to ArgoCD.

Conclusion

In this post we looked at a fairly specific problem: binding EKS cluster nodes to existing Application Load Balancers using the TargetGroupBinding CRD from the AWS Load Balancer Controller. The motivation for this write-up came from the number of people asking about this on GitHub, and I think this is quite a simple and elegant approach.

A point worth noting is that using the AWS Load Balancer Controller decouples your node management from your cluster management. Let’s say we wanted to use Karpenter for autoscaling instead of the de facto cluster-autoscaler. Karpenter does not use AWS AutoScalingGroups but instead creates standalone EC2 instances based on the Provisioners you define. This means our previous approach of attaching AutoScalingGroups to TargetGroups would not work, as the EC2 instances Karpenter manages would not belong to an AutoScalingGroup and would therefore not be automatically attached to the TargetGroup. The AWS Load Balancer Controller doesn’t care how the nodes are created, only that they belong to the cluster and match the label selectors defined. We will probably look into Karpenter again in the near future for our project now that it supports pod anti-affinity, as this was previously a blocker for us.

If you have any suggestions or questions about this post, please leave a comment or get in touch with us!